From Social-Democracy to Social-Liberalism: uncertainty, markets, and economic planning

This is the second post in a series about my switch from Social-Democracy to Social-Liberalism.

The last post was about specific things I did not believe, to try and dispel misconceptions about why I chose to identify as “liberal”. I touched on several topics: socialism, private property, democracy, meritocracy.

Now comes another topic, one which played a big role in my switch: the realization that markets are not inefficient, and that randomness and uncertainty are not always counterproductive.

The roots of anti-market beliefs

There have been several attempts to map anti-market sentiment, and in my experience one group of people always stands out. These are the STEM graduates (engineers, mathematicians, physicists) who argue that econometrics and macroeconomics are “baby science” or even “fake science”, and that any serious scientist should reject economic research because it is full of unserious grifters.

One person I’ve found to hold this belief is Rémi Louf. He has a degree from ENS Ulm, a STEM research school that is arguably more selective than MIT. On top of this, he also has a double degree from Oxford, which isn’t very surprising, because once you’ve done well at ENS, Oxford should be a piece of cake.

Not to say Rémi is wrong here — I barely know him. He’s very smart, is a leading contributor to critical projects surrounding LLMs and Bayesian computation, and deserves recognition.

I can’t blame him — I used to be like him, in fact. And this post explains what made me understand it was wrong.

“God does not play dice”

This is a sentence Einstein wrote in a 1926 letter to Max Born, and it is often quoted as a retort to Niels Bohr. The original sentence goes (in German)

Jedenfalls bin ich überzeugt, daß der nicht würfelt

— “I, at any rate, am convinced god does not play dice”

This was his opinion on the famous Copenhagen Interpretation of Quantum Mechanics, which was championed by Niels Bohr among others. You have to put this in the context of early 20th century theoretical physics. The most powerful theory at the time was built upon Maxwell’s equations, and all of the theory’s predictions could be derived from axioms such as

$$ \begin{cases} \nabla\cdot\mathbf{E} = \dfrac{\rho}{\varepsilon_0}&\\ \nabla\cdot\mathbf{B} = 0& \end{cases}$$

You have to understand that the quantities here, \(\mathbf{E}\) and \(\mathbf{B}\), are profoundly deterministic. They describe the electric and magnetic vector fields, which allow you to know precisely how any electric charge would be affected at any point in space. This is 100% deterministic: zero uncertainty here.

Newton’s physics is similar: you have position \(\mathbf{x}\), velocity \(\dot{\mathbf{x}}\) and acceleration \(\ddot{\mathbf{x}}\), and so forth. Any manipulation of these objects is a deterministic function of the input.

The Danish kids were claiming no such thing though, sadly for our traditionalists. They argued the correct object for modelling was \(\Psi\), a complex-valued function, which not only made no direct physical sense, but whose only interpretation was through \(\Psi^\dagger\Psi = \lvert\Psi\rvert^2\), a so-called probability density function.


Roughly, this means that a system does not act on deterministic quantities, but merely changes the probability that the system falls in a given state. This was the death of determinism, all in favor of efficiency and predictive power: you could call that “the first instance of neoliberalism in theoretical physics”.
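To make the \(\lvert\Psi\rvert^2\) rule concrete, here is a minimal sketch in Python. It assumes a hypothetical two-state system whose amplitudes I picked purely for illustration; it is not a simulation of any real experiment. The point is only that the complex amplitudes never appear in the outcome directly: all you ever observe are frequencies that match the squared moduli.

```python
import random

# Hypothetical two-state system: amplitudes chosen for illustration only.
psi = [0.6 + 0.0j, 0.0 + 0.8j]

def born_probabilities(amplitudes):
    """Return |a|^2 for each amplitude, normalised so the values sum to 1."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    return [w / total for w in weights]

def measure(probabilities, rng):
    """Draw one outcome index at random according to the given probabilities."""
    return rng.choices(range(len(probabilities)), weights=probabilities, k=1)[0]

probs = born_probabilities(psi)

# Repeat the "measurement" many times and compare frequencies to probabilities.
rng = random.Random(0)
n_trials = 10_000
counts = [0] * len(psi)
for _ in range(n_trials):
    counts[measure(probs, rng)] += 1
frequencies = [c / n_trials for c in counts]

print("probabilities:", probs)
print("frequencies:  ", frequencies)
```

A single run of `measure` tells you almost nothing, but the frequencies over many runs settle close to the probabilities.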

Cellular networks are literally neoliberalism

Another huge blow to the deterministic tradition in science is none other than the very reason you’re able to read this article. Claude Shannon’s Mathematical Theory of Communication rests upon one key revolutionary idea: we have to accept that things are not 100% sure, and that some data will be lost.

Shannon’s theory, which he set out to build at AT&T’s Bell Labs (Horror — a private company 😱) can be summed up as follows:

Suppose we accept that we will fail and lose data packets at a non-zero rate. Call that rate R. Can we design systems which take as a premise that real-life conditions are probabilistic in nature, and thus affect this error probability, yet in which R goes to zero as we increase some design parameter?

This framework, which assumes you cannot understand exactly what goes on and only aims to quantify statistical properties of the transmission channel, turns out to be much, much better than deterministic theories.

This is perhaps another major success of “scientific neoliberalism”: it reinforces the idea that accepting uncertainty and “irrationality”, and not having everything under control, can actually lead to better outcomes.

The way Shannon designed his theory is exactly how I think about markets. Markets are huge Bayesian networks which constantly update their marginal likelihoods in reaction to real-time events. They are pure stochastic machines. And they work better than the alternative.
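Here is a small sketch of that idea in Python, under loud assumptions: a binary symmetric channel that flips each bit independently with a hypothetical probability `p`, and a naive repetition code decoded by majority vote. This is not Shannon's construction. A repetition code pays for reliability with a transmission rate of 1/n that shrinks to zero, whereas Shannon's theorem promises vanishing error at any fixed rate below the channel capacity. It only illustrates how the error rate the text calls R can be driven down by turning a design knob, here the number of copies `n`.

```python
from math import comb

def majority_error(p, n):
    """Exact probability that a majority vote over n noisy copies is wrong.

    Each copy is flipped independently with probability p.
    n is assumed odd so that the vote can never tie.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd")
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

p = 0.1  # hypothetical flip probability of the channel
errors = {n: majority_error(p, n) for n in (1, 3, 5, 11, 21)}

for n, err in errors.items():
    print(f"n={n:2d}  error={err:.2e}")
```

No single bit is ever transmitted with certainty, yet the decoded error shrinks as `n` grows.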

Even the Soviets agree markets are better!

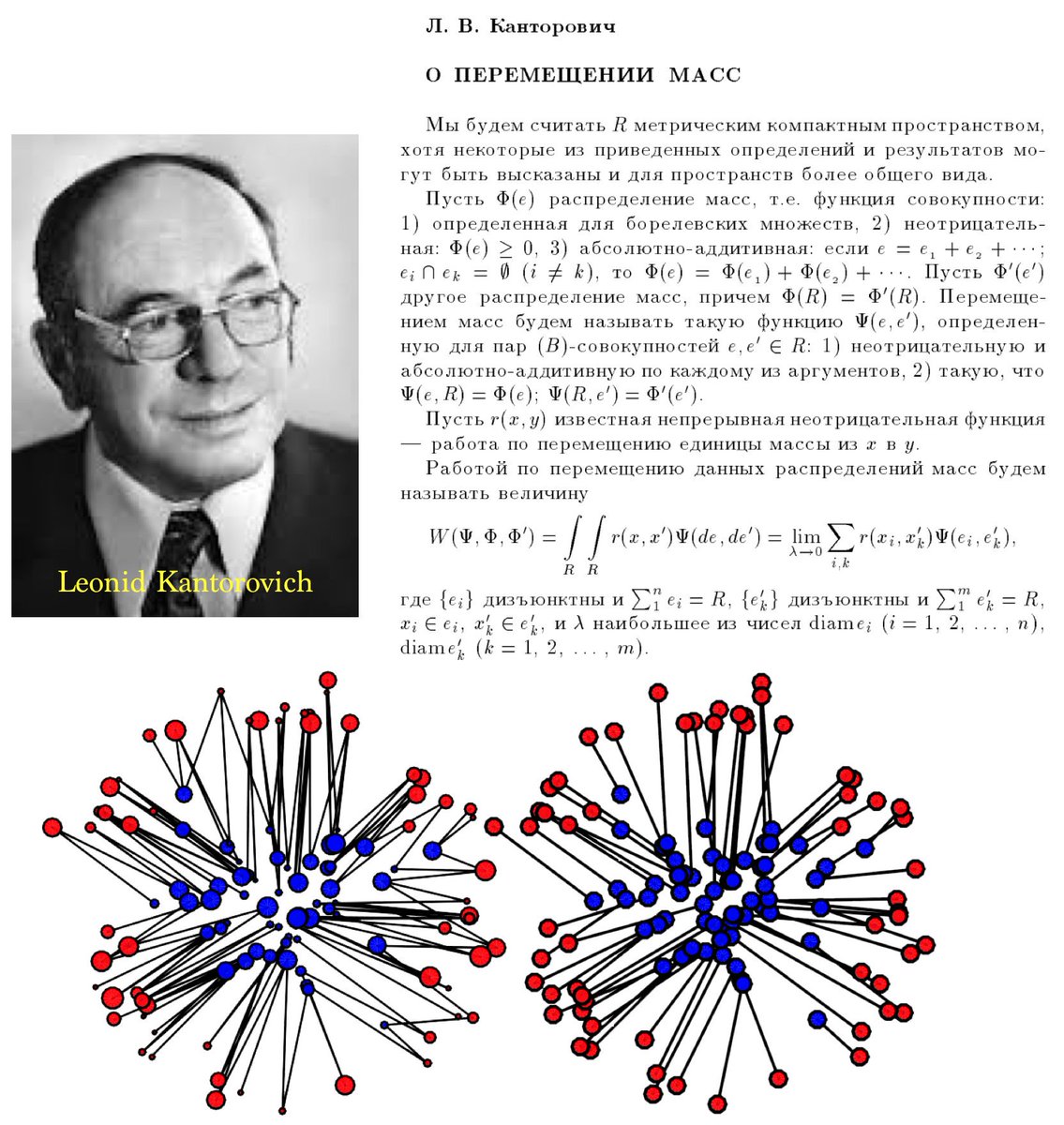

One of the funniest moments in scientific history is arguably Kantorovich’s major contribution to Optimal Transport Theory. To give a bit of context, Optimal Transport is what the Soviets used for economic planning. It is a series of mathematical tools to distribute goods from a source domain \(\mathcal{X}\) (say, your factories), to a target domain \(\mathcal{Y}\) (the consumers). The theory assumes moving one point from a source position \(x\) to a target position \(y\) incurs a cost \(c(x,y)\), which is a real number.

The so-called “Kantorovich formulation” goes as follows: assume you have fixed marginals \(\mu\,,\,\nu\) which you cannot change. These are the locations of your factories across the country (you cannot change that), and the houses in which people live, or their household wealth (you cannot change these either). What you can play with is what Kantorovich calls a “coupling” \(\gamma\in\Gamma(\mathcal{X}, \mathcal{Y})\), which is just a joint density, or a “probabilistic mapping” from source to target.

Anyway, the Kantorovich Formulation is nothing groundbreaking. It says the objective is to minimize the overall cost of moving all the sources to targets, i.e. the integral problem of minimizing

$$\int_\mathcal{X}\int_\mathcal{Y}\,c(x,y)\mathrm{d}\gamma(x,y)$$

over the set of all potential couplings \(\gamma\in\Gamma(\mathcal{X}, \mathcal{Y})\). What was groundbreaking, though, is the dual formulation, which he proved attains the same optimal value. It is

$$\sup \left\{ \int_\mathcal{X} \varphi (x) \, \mathrm{d} \mu (x) + \int_\mathcal{Y} \psi (y) \, \mathrm{d} \nu (y)\right\}$$

where the sole condition on the functions \(\varphi\) and \(\psi\) is \(\varphi (x) + \psi (y) \leq c(x, y)\) for all \(x\) and \(y\).
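The two problems can be checked against each other on a toy instance. The sketch below is in Python and makes strong simplifying assumptions: two hypothetical factories and two hypothetical households, each carrying mass 1/2, with a cost matrix I made up. With uniform marginals of equal size, an optimal coupling can be found among permutations (this is the Birkhoff-von Neumann theorem), so brute force is enough. The potentials `phi` and `psi` are picked by hand for this instance; this is not a general solver.

```python
from itertools import permutations

# Hypothetical toy instance: cost[i][j] is the cost of shipping from factory i
# to household j. Every factory and every household carries mass 1/2.
cost = [[1.0, 3.0],
        [4.0, 2.0]]
n = len(cost)
mass = 1.0 / n

def primal_value(perm):
    """Total cost when factory i ships all of its mass to household perm[i]."""
    return sum(mass * cost[i][perm[i]] for i in range(n))

# Primal problem: brute-force search over permutation couplings.
best_perm = min(permutations(range(n)), key=primal_value)
primal = primal_value(best_perm)

# Dual problem: candidate potentials, chosen by hand for this instance.
phi = [1.0, 2.0]
psi = [0.0, 0.0]

# Dual feasibility: phi(x) + psi(y) <= c(x, y) for every pair.
feasible = all(phi[i] + psi[j] <= cost[i][j] for i in range(n) for j in range(n))
dual = sum(mass * phi[i] for i in range(n)) + sum(mass * psi[j] for j in range(n))

# The constraint is tight exactly on the pairs where mass is actually shipped.
tight_on_support = all(
    phi[i] + psi[best_perm[i]] == cost[i][best_perm[i]] for i in range(n)
)

print("optimal assignment:", best_perm)
print("primal value:", primal, "dual value:", dual)
print("dual feasible:", feasible, "tight on support:", tight_on_support)
```

Both values come out equal, and the inequality becomes an equality on the routes the optimal plan actually uses.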

Others will explain this better than I do, but above \(\varphi (x)\) represents supply and \(\psi (y)\) represents demand, and the fact that \(\varphi (x) + \psi (y) = c(x,y)\) wherever the optimal coupling \(\gamma\) actually moves mass suggests the optimal system must separate demand management from supply management. It essentially implies there shouldn’t be a single actor doing both functions, which means planning is strictly inferior at solving allocation problems.

This, which is probably one of the strongest theoretical pieces of evidence in favor of markets, was proven by a literal Soviet mathematician trying to make central planning more efficient. What a splendid victory of neoliberalism once again.

What about the future? Will neoliberalism stop?

Well, I have bad news. Have you heard about the Artificial Intelligence revolution? All these humans doing worse than machines at an increasing number of tasks? Well, the algorithms these machines use are inherently probabilistic. None of them assumes determinism. In fact, the entire theory is built around randomness.

Arguably the entire field of Big Data and Machine Learning is about leveraging the Law of Large Numbers. It is neoliberalism on steroids, I’m afraid. Even more so because private companies own most of the compute and concentrate most of the skills within the industry.
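If you have never seen the Law of Large Numbers at work, here is a minimal sketch in Python. It assumes independent uniform draws on [0, 1) with a fixed, arbitrary seed; nothing here is specific to any machine learning library.

```python
import random

def sample_mean_error(n_samples, seed=0):
    """Distance between the mean of n uniform draws and the true mean 0.5."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += rng.random()  # uniform on [0, 1), so the true mean is 0.5
    return abs(total / n_samples - 0.5)

for n in (10, 1_000, 100_000):
    print(f"n={n:>7}  |mean - 0.5| = {sample_mean_error(n):.5f}")
```

Each draw is pure noise, but the average becomes more and more predictable as the sample grows.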

There has never been a time in history when believing stochastic models are inferior was more wrong. And perhaps economics as a science was visionary in embracing this early.

The teachings of “scientific neoliberalism”

The people who argue that markets are random and uncertain, and that it follows they are less efficient at solving allocation problems, are most certainly fools. I know because I was one.

I wanted to believe that deterministic rules do better than random systems. That is wrong. I wanted to believe that clear-cut regulation (banning stuff, price controls, nationalization, etc.) would obviously yield better outcomes.

But it couldn’t be more wrong. And this article just scratches the surface. The more you learn, the more you realize it is pure stupidity.